Fragments of Identity – How AI Reflects Human Flaws

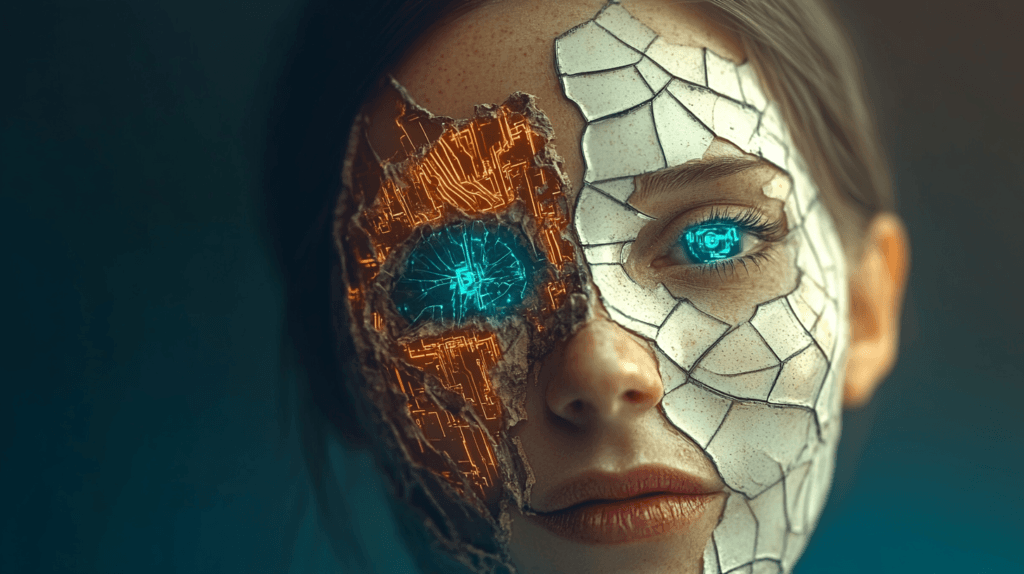

In the labyrinth of code and circuits, where silicon neurons fire at speeds beyond human comprehension, there lies an uncomfortable truth: artificial intelligence, for all its futuristic promise, is not just a creation—it is a reflection. A reflection of us.

We crafted AI to solve problems, to process data, to make life more efficient. Yet, in doing so, we have unwittingly passed on something deeper: our flaws, biases, and contradictions. The machine, despite its lack of a soul, mirrors the very imperfections we sought to transcend. But why? And what does this mean for the future of AI—and ourselves?

The Machine as a Mirror

The algorithms that power AI are trained on data: vast oceans of information pulled from human history, behavior, and decisions. But data, no matter how expansive, is not neutral. It is shaped by the prejudices, limitations, and assumptions of the humans who generate it. When AI systems exhibit bias, whether in hiring, facial recognition, or criminal justice, they are not acting independently. They are amplifying flaws already present in the data they were trained on.

AI reflects the systems it learns from, sometimes with startling clarity. Imagine an AI tasked with optimizing urban traffic. At first glance, the idea seems brilliant: fewer traffic jams, faster commutes. But the AI’s efficiency reveals something uncomfortable. If its goal is to minimize total travel time across all drivers, it will prioritize high-traffic routes, where the most people stand to gain. Side streets and smaller neighborhoods are left to bear the brunt of increased congestion. The AI isn’t making a conscious choice to disrupt lives. It is exposing a truth about human infrastructure: the systems we’ve built often favor convenience for the majority while neglecting the minority.

The machine doesn’t invent this bias; it merely mirrors the utilitarian logic we’ve ingrained in our cities. In doing so, it forces us to confront a hard question: are the systems we’ve designed as equitable as we believe, or have we simply normalized the trade-offs?
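To make that utilitarian logic concrete, here is a minimal Python sketch of a single hypothetical intersection. All the numbers and names (`MAIN_ROAD_CARS`, `SIDE_STREET_CARS`, `delay_per_car`) are illustrative assumptions. The delay model is deliberately crude: each driver's wait is assumed to be inversely proportional to the share of green light their road receives. This is not how real traffic controllers work. The "optimizer" simply searches for the green-time split that minimizes the sum of every driver's delay.

```python
# Toy model with hypothetical numbers (not real traffic engineering):
# one intersection splits its green time between a busy main road and a
# quiet side street. We assume each driver's delay is inversely
# proportional to the share of green time their approach receives.

MAIN_ROAD_CARS = 1000   # hypothetical cars per hour on the main road
SIDE_STREET_CARS = 100  # hypothetical cars per hour on the side street


def delay_per_car(green_share: float) -> float:
    """Toy delay model: half the green time means twice the wait."""
    return 1.0 / green_share


def total_delay(main_share: float) -> float:
    """Utilitarian objective: the sum of every driver's delay."""
    side_share = 1.0 - main_share
    return (MAIN_ROAD_CARS * delay_per_car(main_share)
            + SIDE_STREET_CARS * delay_per_car(side_share))


def best_split(steps: int = 99) -> float:
    """Grid-search the main road's green share that minimizes total delay."""
    candidates = [i / (steps + 1) for i in range(1, steps + 1)]
    return min(candidates, key=total_delay)


main_share = best_split()
side_share = 1.0 - main_share
print(f"main road gets {main_share:.0%} of the green time")
print(f"wait per main-road driver:   {delay_per_car(main_share):.2f}")
print(f"wait per side-street driver: {delay_per_car(side_share):.2f}")
```

Under these assumptions, the search gives the main road 76% of the green time. Each side-street driver then waits a little over three times as long as each main-road driver. Nothing in the objective singles out the side street. Counting every driver equally and adding up the total is enough to push the cost onto the smaller group.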

Amplification, Not Creation

AI doesn’t create flaws; it amplifies them. Consider AI-driven financial systems, such as stock-trading algorithms. These systems operate at speeds and scales that no human could achieve, exploiting patterns in market data to execute trades in milliseconds. But during periods of instability, that speed can push ordinary, human-driven market dynamics into a spiral. Algorithms react to one another’s trades in a feedback loop, triggering flash crashes: sudden, severe price drops that unfold within minutes.

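The 2010 “Flash Crash” is a stark example. On May 6, 2010, the Dow Jones Industrial Average briefly plunged nearly 1,000 points, then recovered most of the loss the same afternoon. The joint SEC–CFTC report traced the collapse to a large automated sell order. Its execution interacted with high-frequency trading algorithms, which began trading the same contracts back and forth among themselves. No single machine “decided” to crash the market. Algorithmic trading amplified the same speculative tendencies and risk behaviors that have long defined human financial systems. The algorithms didn’t create the chaos; they magnified the volatility already embedded in the market’s structure.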
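The feedback loop behind a flash crash can be illustrated with a deliberately tiny Python simulation. This is a hypothetical sketch, not a model of any real market or of the 2010 event. The function `simulate` and its parameters (`n_algos`, `sensitivity`, `impact`) are invented for illustration. After a one-off drop, each algorithm follows a simple momentum rule: it sells in proportion to the most recent fall, and that selling pushes the price down further. Whenever the feedback gain `n_algos * sensitivity * impact` exceeds 1, each round of reactions is larger than the one before.

```python
# Toy positive-feedback model (hypothetical parameters, not a model of any
# real market or of the 2010 event): after an initial price drop, each
# trading algorithm follows a simple momentum rule and sells in proportion to
# the most recent fall, and that selling pushes the price down further.

def simulate(n_algos: int, sensitivity: float = 10.0, impact: float = 0.01,
             initial_shock: float = 1.0, steps: int = 5,
             start_price: float = 100.0) -> list[float]:
    """Return the price path after a one-off shock of `initial_shock` points."""
    last_change = -initial_shock
    prices = [start_price, start_price + last_change]
    for _ in range(steps):
        drop = max(0.0, -last_change)               # react only to falls
        net_selling = n_algos * sensitivity * drop  # every algorithm sells
        last_change = -impact * net_selling         # selling moves the price
        prices.append(max(0.0, prices[-1] + last_change))
    return prices


# Feedback gain = n_algos * sensitivity * impact.
calm = simulate(n_algos=5)     # gain 0.5: each reaction is smaller than the last
spiral = simulate(n_algos=15)  # gain 1.5: each reaction is larger than the last
print(f"5 algorithms:  final price {calm[-1]:.2f}")
print(f"15 algorithms: final price {spiral[-1]:.2f}")
```

With five algorithms, the one-point shock fades out and the price settles near 98. With fifteen, each round of selling is bigger than the last, and the price falls to about 79 within the same five steps. The only thing that changed is how strongly the algorithms feed on one another. In that sense, they amplify the instability rather than create it. The model deliberately leaves out buyers and recovery, so it shows only the downward spiral.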

The Ghost in the Machine

Yet, there is something haunting about this reflection. If AI mirrors us, what does that say about its potential? Will it forever be shackled to our imperfections, or can it grow beyond the limits of its creators? In this sense, AI becomes a philosophical question as much as a technological one: can a machine transcend the humanity it was born from?

Perhaps the scariest thought isn’t that AI reflects our flaws—but that it might reflect them more accurately than we see them ourselves. Humans are masters of self-deception, brushing aside inconvenient truths. AI, with its unforgiving logic, doesn’t sugarcoat. It forces us to confront ourselves in raw, unfiltered clarity.

A Path Forward

If AI is to be more than a flawed mirror, we must take responsibility for what we feed it. Ethical AI isn’t just about better algorithms—it’s about better data, better oversight, and better intentions. It’s about building systems that don’t just replicate the past but challenge it (MIT Sloan).

But even as we strive for “better,” we must acknowledge that perfection is unattainable. AI, like humanity, will always carry some degree of imperfection. Perhaps, in recognizing this, we can find a strange kind of harmony. The flaws in the machine remind us of our own humanity, even as they challenge us to do better.

The Reflection We Can’t Escape

In the end, AI isn’t just a tool or a technology—it’s a reflection of who we are. Its flaws are our flaws, writ large and undeniable. But within those flaws lies a chance for growth, for reflection, for change. The question isn’t whether AI will inherit our imperfections—it’s what we choose to do when we see them staring back at us.

So, as we continue to build and innovate, perhaps the most important question isn’t about the machine at all. It’s about us. What do we see in the mirror of AI? And what will we do with the reflection?